A new dimension in test data analysis through AI: How ddm hopt+schuler and Monolith AI are uncovering hidden potential in test data

Artificial intelligence is not only revolutionizing the software world—it is also opening entirely new possibilities in mechanical engineering and industrial quality assurance.

One of the most innovative companies in this space is Monolith AI, a young tech firm from the UK that specializes in AI-powered analysis of test and development data.

What does Monolith AI actually do?

Monolith AI was founded by Dr. Richard Ahlfeld, who, during his research at Imperial College London, realized a major untapped opportunity: the vast volumes of test data generated daily in industry are often underutilized.

His mission was to harness this data with AI to support concrete, data-driven engineering decisions that are faster, more precise, and more efficient.

Today, Monolith AI works with leading manufacturers around the world, bringing data-driven innovation into technical product development.

Putting AI to work at ddm hopt+schuler

We saw the potential of this approach and launched a joint pilot project to explore how our digital measurement data from production could be leveraged more effectively with AI. The goal: to detect, at an early stage, deviations and patterns that indicate a need for optimization, long before traditional systems might catch them.

In one of our high-volume switch series, we analyzed our functional tests using Monolith AI's technology.

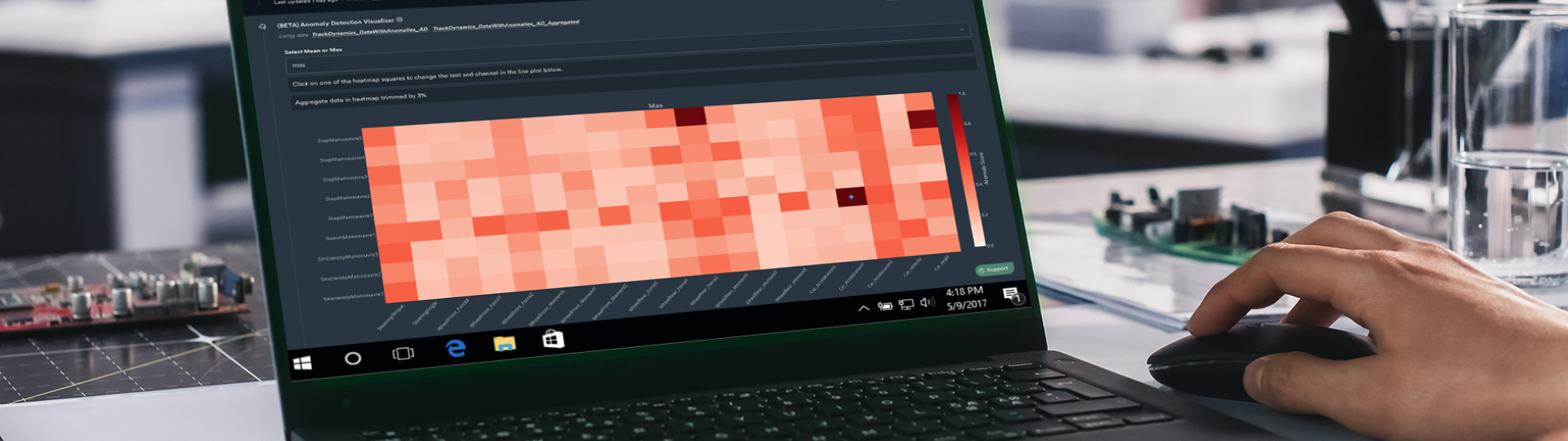

Monolith AI’s anomaly detection feature leverages a proprietary, industry-tested algorithm to identify over 90% of test data errors quickly. It detects various error types across hundreds of data channels—both individual and complex multivariate issues—within seconds.
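To make the difference between individual and multivariate issues concrete, here is a minimal Python sketch. It is not Monolith AI's proprietary algorithm: it swaps in a textbook Mahalanobis distance on two hypothetical, correlated channels, and all values are made up for illustration. The point `off_trend` stays inside the range of each channel on its own, so a per-channel limit check passes, but it breaks the relationship between the two channels.

```python
from statistics import mean

def within_channel_limits(reference, point):
    """Univariate check: is each value inside the range seen for its own channel?"""
    channels = zip(*reference)
    return all(min(ch) <= v <= max(ch) for ch, v in zip(channels, point))

def mahalanobis_2d(reference, point):
    """Multivariate check: distance of `point` from the reference cloud,
    taking the correlation between the two channels into account."""
    xs, ys = zip(*reference)
    mx, my = mean(xs), mean(ys)
    n = len(reference)
    # Sample covariance matrix entries
    sxx = sum((x - mx) ** 2 for x in xs) / (n - 1)
    syy = sum((y - my) ** 2 for y in ys) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in reference) / (n - 1)
    det = sxx * syy - sxy ** 2
    dx, dy = point[0] - mx, point[1] - my
    # d^2 = [dx dy] * inverse(covariance) * [dx dy]^T, written out for 2x2
    d_squared = (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det
    return d_squared ** 0.5

# Hypothetical reference measurements: channel B rises with channel A
reference = [(1, 1.1), (2, 1.9), (3, 3.2), (4, 3.8),
             (5, 5.1), (6, 5.9), (7, 7.2), (8, 7.8)]

on_trend = (4.5, 4.5)   # follows the usual relationship
off_trend = (2.5, 6.5)  # each value is plausible alone, the combination is not

for name, p in (("on_trend", on_trend), ("off_trend", off_trend)):
    print(name, within_channel_limits(reference, p),
          round(mahalanobis_2d(reference, p), 1))
```

Both points pass the per-channel check, but only the off-trend point gets a large distance. A production system would have to handle hundreds of channels and far more complex patterns than this two-channel toy case.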

The objective wasn’t merely to document test results, but to actively scan for patterns that could signal future deviations or emerging quality risks—patterns that would remain hidden using conventional methods.

From data collection to pattern recognition

The foundation of the analysis was a dataset of roughly 500,000 test records, each tagged with a result of either “OK” or “not OK.” The focus was on physical parameters such as switch-on angle, contact resistance, and torque.

Monolith AI’s models detected characteristic signals within the “not OK” datasets. When those same patterns were applied to the “OK” data, an additional 0.1% of parts were flagged as anomalous, despite having previously passed all standard tests. That is the equivalent of 1,000 additional defective switches detected per million.
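The idea of learning from “not OK” parts and then re-screening the “OK” parts can be sketched in a few lines of Python. This is an assumption-laden toy, not the models used in the pilot: it uses a simple nearest-centroid rule, the field names (`switch_on_angle`, `contact_resistance`, `torque`, `result`) are hypothetical, and the seven records are invented.

```python
from math import dist
from statistics import mean, stdev

FEATURES = ("switch_on_angle", "contact_resistance", "torque")

def centroid(points):
    """Mean position of a set of points, axis by axis."""
    return tuple(mean(axis) for axis in zip(*points))

def flag_suspicious_ok(records):
    """Return indices of "OK" records that lie closer to the "not OK"
    centroid than to the "OK" centroid (nearest-centroid rule)."""
    # Standardize each feature so that no single unit dominates the distance
    stats = [(mean(r[f] for r in records), stdev(r[f] for r in records))
             for f in FEATURES]

    def scale(r):
        return tuple((r[f] - m) / s for f, (m, s) in zip(FEATURES, stats))

    ok = [(i, scale(r)) for i, r in enumerate(records) if r["result"] == "OK"]
    nok = [scale(r) for r in records if r["result"] == "not OK"]
    c_ok = centroid([p for _, p in ok])
    c_nok = centroid(nok)
    return [i for i, p in ok if dist(p, c_nok) < dist(p, c_ok)]

# Invented toy records; values and units are purely illustrative
records = [
    {"switch_on_angle": 30.0, "contact_resistance": 10.0, "torque": 1.00, "result": "OK"},
    {"switch_on_angle": 30.2, "contact_resistance": 10.1, "torque": 1.02, "result": "OK"},
    {"switch_on_angle": 29.9, "contact_resistance": 9.9, "torque": 0.99, "result": "OK"},
    {"switch_on_angle": 30.1, "contact_resistance": 10.0, "torque": 1.01, "result": "OK"},
    # Passed the standard test, but resembles the failed parts
    {"switch_on_angle": 33.8, "contact_resistance": 14.5, "torque": 1.38, "result": "OK"},
    {"switch_on_angle": 34.0, "contact_resistance": 15.0, "torque": 1.40, "result": "not OK"},
    {"switch_on_angle": 34.5, "contact_resistance": 15.5, "torque": 1.45, "result": "not OK"},
]

flagged = flag_suspicious_ok(records)
print("Flagged OK records:", flagged)
```

In this toy data, only the fifth record (index 4) is flagged. On real production data, the flagged share would then be compared against the overall “OK” population, as with the 0.1% figure above.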

We selected this particular switch series because its high volumes offer ideal conditions for data-driven analysis: at this scale, even small optimizations can have a substantial impact. As a result, we were able to significantly increase the efficiency of our testing systems.

Results that add real value

We were particularly impressed by two aspects:

- First, how quickly the AI was able to extract new insights from existing data—insights that traditional evaluations had missed.

- Second, the highly collaborative and practical working relationship with the Monolith AI team, who supported us every step of the way.

Opportunities and challenges for SMEs

This new testing methodology enables us to not only identify clearly faulty components, but also to spot early indicators of potential issues—long before they might lead to failures in the field. For a mid-sized company like ours, this represents a genuine competitive edge in terms of quality assurance.

The biggest challenge lies less in the technology itself than in building the necessary knowledge and processes. AI requires a shift in mindset: from traditional inspection to proactive data utilization.

Looking forward

The results speak for themselves. We now plan to integrate this intelligent pattern recognition approach from the outset in new projects—as a core element of our modern quality assurance strategy. Because when data is used correctly, it doesn’t just reveal problems—it reveals potential.